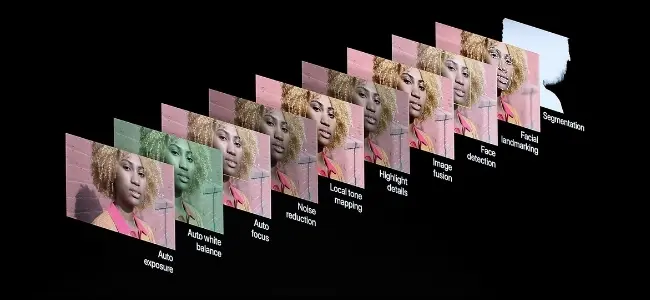

And Enhance!! (from Blade Runner), that’s Computational Photography.

Computational photography describes the signal processing techniques and algorithms that allow computers to replicate photographic processes like motion-blur correction, auto-focus, depth sensing, zoom and other features that would otherwise be impossible without dedicated optics. While some of these processes use artificial intelligence techniques, computational photography is more than just AI: it covers the whole series of processes that take an image from the ones and zeros captured by the image sensor to the final image displayed on screen. This article focuses mainly on computational photography techniques that employ AI.

Smartphone cameras face two hardware limitations: there is little space to fit actual optics (like movable lenses to alter focus or depth of field), and the technology behind digital cameras (CMOS sensors) comes with limitations of its own. Smartphones compensate with the enormous computational power of their processors, using clever algorithms to provide features like zoom and object-sensitive focus, among others. In recent times these algorithms have incorporated AI techniques to provide features that were once unimaginable, like the Google Pixel’s night mode, which lets you take high-definition pictures in extremely low light.

...