AIpplier

An LLM-based web automation agent that automates job applications on popular job boards. Built using LangGraph, Playwright and GPT-4o. Try it out here.

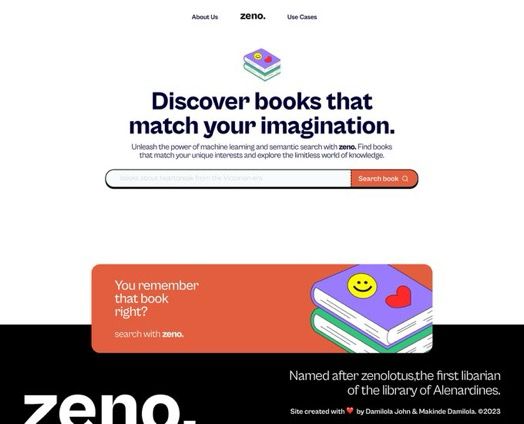

Find books about topics/ideas in your head

A recommendation service that lets you find books about any topic or idea you have in mind by describing it in natural language. GitHub repo link

Tracking a packet from source to destination

A Python tool for tracing the path a packet follows across the internet, inspired by the Unix traceroute tool. GitHub
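Traceroute-style tools discover a path by sending probes with increasing TTL (hop limit) values. Each router decrements the TTL, and the router at which it reaches zero drops the packet and replies with an ICMP "Time Exceeded" message, revealing its address. A UDP probe that reaches the destination typically triggers an ICMP "Port Unreachable" reply instead. The sketch below is a toy, in-memory simulation of that idea, not the project's code: the path, the documentation-range addresses and the helper names `send_probe`/`traceroute` are hypothetical. A real tool needs raw sockets (usually root privileges) and ICMP parsing.

```python
from typing import List, Tuple

# Hypothetical path from source to destination (documentation-range IPs).
# The last entry is the destination host itself.
PATH = ["192.0.2.1", "198.51.100.7", "203.0.113.20", "203.0.113.99"]


def send_probe(path: List[str], ttl: int) -> Tuple[str, str]:
    """Simulate one probe with the given TTL travelling along `path`.

    Each router decrements the TTL; the router where it hits zero answers
    with "time_exceeded". If the probe reaches the destination, the
    destination answers with "port_unreachable".
    """
    if not path:
        raise ValueError("empty path")
    for hop_index, address in enumerate(path):
        if hop_index == len(path) - 1:  # probe arrived at the destination
            return address, "port_unreachable"
        ttl -= 1  # this router decrements the TTL
        if ttl == 0:  # TTL expired here: router reports itself
            return address, "time_exceeded"
    raise AssertionError("unreachable")


def traceroute(path: List[str], max_hops: int = 30) -> List[str]:
    """Send probes with TTL = 1, 2, 3, ... and record who answered each one."""
    hops: List[str] = []
    for ttl in range(1, max_hops + 1):
        address, reply = send_probe(path, ttl)
        hops.append(address)
        if reply == "port_unreachable":  # destination reached: stop probing
            break
    return hops


if __name__ == "__main__":
    for ttl, address in enumerate(traceroute(PATH), start=1):
        print(f"{ttl:2d}  {address}")
```

With this simulated path, the probe with TTL = k is answered by the k-th hop, so the list of responders reproduces the path in order.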

Training a 125M-parameter decoder-only model on 70B tokens of Nigerian languages

Demo | Article

Fine-tuning GPT-2 to Reconstruct Sentences

Two words are anagrams if one can be formed by permuting the letters of the other. Applying the same logic to sentences, two sentences would be "anagrams" (there is no such term) if the words of one can be rearranged to form the other. I thought it would be interesting to fine-tune a language model to do this. You might be thinking that simply rearranging the words in a sentence doesn’t require intelligence and can be done with trivial algorithms. You would be right, but I added a twist: given a random sequence of words, the language model has to return a grammatically correct sequence that uses the same set of words. For example, the following sequence: ...
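The sentence-level "anagram" relation described above reduces to comparing the multisets of words in two sentences. The sketch below is illustrative only: the function names are hypothetical, words are split on whitespace and lowercased (punctuation is not stripped), and `make_training_pair` shows one plausible way to build (shuffled input, original target) pairs for fine-tuning, not necessarily how this project prepared its data.

```python
import random
from collections import Counter
from typing import Tuple


def words(sentence: str) -> Counter:
    """Multiset of lowercase, whitespace-separated words (punctuation kept)."""
    return Counter(sentence.lower().split())


def are_sentence_anagrams(a: str, b: str) -> bool:
    """True if the words of `a` can be rearranged to form `b`."""
    return words(a) == words(b)


def make_training_pair(sentence: str, seed: int = 0) -> Tuple[str, str]:
    """Hypothetical data prep: shuffled words as the input, original sentence as the target."""
    tokens = sentence.split()
    shuffled = tokens[:]  # copy so the original order is preserved
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    return " ".join(shuffled), sentence


if __name__ == "__main__":
    source, target = make_training_pair("the cat sat on the mat")
    print(source, "->", target)
    print(are_sentence_anagrams(source, target))
```

The checker is the "trivial algorithm" part of the task; what the model has to learn is the harder step of picking the grammatical ordering among all permutations of the same words.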